Accelerated AI and ML Workloads refer to the use of specialized hardware accelerators, such as GPUs (Graphics Processing Units) or TPUs (Tensor Processing Units), to enhance the performance of artificial intelligence (AI) and machine learning (ML) tasks.

Challenges & Requirements

Accelerated AI and ML workloads often require high bandwidth memory to keep up with the massive amounts of data being processed. There's a need for memory architectures that can deliver higher bandwidth to match the computational capabilities of modern accelerators. This could involve innovations in DRAM design, such as wider memory buses, faster memory interfaces, or the integration of high-bandwidth memory (HBM) technologies.

AI is a data-intensive application that generates and processes large amounts of data. SMART Modular's NVDIMM, persistent memory with SafeStor™ encryption, dramatically accelerates system performance for logging, tiering, caching and write buffering, making it an ideal memory solution for AI applications. NVDIMMs are an ideal solution for dramatically accelerating system performance for AI and ML applications.

SMART's DRAM Solutions

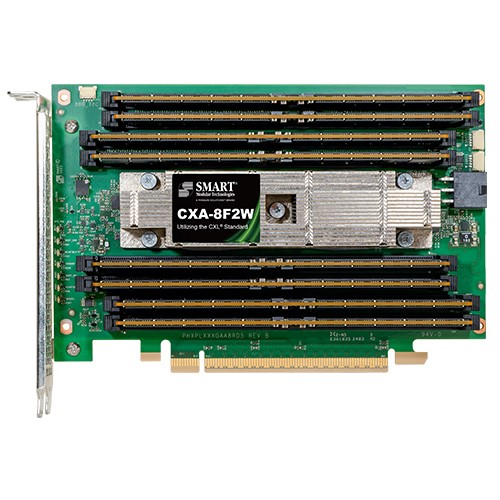

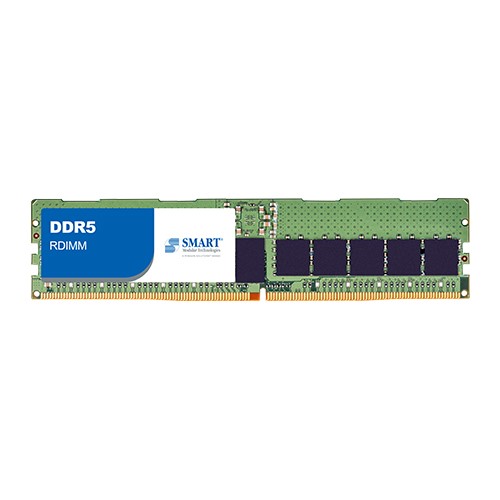

- CXL 8-DIMM AIC - SMART’s CXA-8F2W is an 8-DIMM AIC utilizing the CXL standard. It’s a Type 3 PCIe Gen5 Add-In Card (AIC) consisting of eight DDR5 RDIMMs mounted on a Full Height, Half Length PCIe form factor module. It uses two x16 CXL controllers to implement two x8 CXL ports capable of a total bandwidth of 64GB/s.

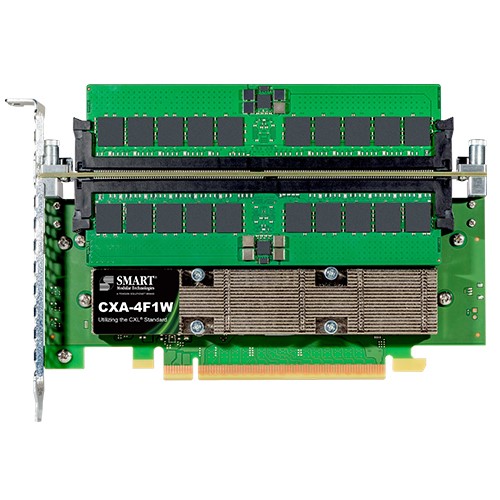

- CXL 4-DIMM AIC - SMART’s CXA-4F1W is a 4-DIMM AIC, a Type 3 PCIe Gen5 Add-In Card (AIC) utilizing the CXL standard consisting of four DDR5 RDIMMs mounted on a Full Height, Half Length PCIe form factor module. It uses one x16 CXL controller to implement a single x16 CXL port capable of atotal bandwidth of 64GB/s.

- DDR5 Zefr ZDIMM - ZDIMMs (Zefr Memory Module) are rigorously tested to eliminate over 90% of memory reliability failures, ensuring maximum application uptime and optimizing memory subsystem reliability. ZDIMMs utilize SMART’s Zefr™ proprietary screening process, ensuring the industry’s highest levels of uptime and reliability.

SMART's Flash Solutions

- DC5820 Data Center SSDs - SMART’s DC5820 PCIe Gen5 NVMe Data Center SSDs are designed to meet the increasing demands placed on storage systems in Hyperscaler, Hyper-converged, Enterprise, and Edge data centers. The DC5820 Data Center SSD Family delivers industry-leading KIOPs/Watt performance with superior Quality of Service (QoS) across mixed application workloads.